Trading predictions using AI and Python

We’ve explored various methodologies for predicting the movements of stock prices, including the utilization of forecasting tools such as Facebook’s Prophet, statistical methods like the Seasonal Autoregressive Integrated Moving-Average (SARIMA) model, machine learning strategies such as Polynomial Regression, and ultimately, an AI-based recurrent neural network (RNN).

Among the many AI models and techniques available, we found that the Long Short-Term Memory (LSTM) model gave the most favorable results. An LSTM is a variant of the recurrent neural network architecture that is well suited to sequence prediction. Unlike conventional feedforward neural networks, an LSTM has a memory-like cell state that lets it preserve context across long sequences. This makes it a good fit for time-series forecasting, natural language processing, and other tasks that rely on sequence data. It addresses a fundamental shortcoming of standard RNNs by mitigating the vanishing gradient problem, which helps the model learn long-term dependencies in the data. As a result, LSTMs have become a popular choice for tasks that require understanding data over long time spans.
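To make the "memory-like structure" more concrete, here is a minimal sketch of a single LSTM time step for one unit with scalar input and state. It is not how Keras implements the layer, and the weights are hypothetical values chosen by hand so that the forget gate stays close to 1. It only illustrates how the additive cell-state update lets information from an early input persist across later steps.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM with scalar input and state.

    `w` maps each gate name to (input weight, recurrent weight, bias).
    """

    def gate(name, activation):
        wx, wh, b = w[name]
        return activation(wx * x + wh * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to admit
    g = gate("candidate", math.tanh)   # proposed new content
    o = gate("output", sigmoid)        # how much of the cell state to expose

    c = f * c_prev + i * g             # additive update of the cell state
    h = o * math.tanh(c)               # hidden state passed to the next step
    return h, c


# Hypothetical hand-picked weights (a trained model would learn these)
weights = {
    "forget": (0.0, 0.0, 4.0),     # large bias keeps the forget gate near 1
    "input": (1.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 4.0),
}

h, c = 0.0, 0.0
sequence = [1.0] + [0.0] * 9       # one signal, then nine empty steps
cell_states = []
for x in sequence:
    h, c = lstm_step(x, h, c, weights)
    cell_states.append(c)

print([round(value, 3) for value in cell_states])
```

With these weights the cell state decays only slowly after the first input, so most of the signal is still present ten steps later.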

To demonstrate its efficacy, we’ve developed a proof of concept.

Preparation Steps:

- Install the latest Python and PIP.

- Create a Python project with a “main.py” file.

- Add a “data” directory in the project.

- Set up and activate a virtual environment.

trading-ai-lstm $ python3 -m venv venv
trading-ai-lstm $ source venv/bin/activate
(venv) trading-ai-lstm $

- Create a “requirements.txt” file.

pandas
numpy
scikit-learn
scipy
matplotlib
tensorflow
eodhd
python-dotenv

- Make sure you have upgraded PIP within your virtual environment and install the dependencies.

(venv) trading-ai-lstm $ pip install --upgrade pip
(venv) trading-ai-lstm $ python3 -m pip install -r requirements.txt

We store our EODHD API key in a “.env” file.

API_TOKEN=<YOUR_API_KEY_GOES_HERE>

That completes the setup. If you’re using VSCode and want to use the same “.vscode/settings.json” file as ours, here it is.

{
    "python.formatting.provider": "none",
    "python.formatting.blackArgs": ["--line-length", "160"],
    "python.linting.flake8Args": [
        "--max-line-length=160",
        "--ignore=E203,E266,E501,W503,F403,F401,C901"
    ],
    "python.analysis.diagnosticSeverityOverrides": {
        "reportUnusedImport": "information",
        "reportMissingImports": "none"
    },
    "[python]": {
        "editor.defaultFormatter": "ms-python.black-formatter"
    }
}

Here’s a GitHub repository for this project in case you need guidance.

Building up the code

The first step is to import the necessary libraries.

import os

# Set this before TensorFlow is imported so that it takes effect
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "1"

import pickle
import pandas as pd
import numpy as np
from dotenv import load_dotenv
from sklearn.metrics import mean_squared_error, mean_absolute_error
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Dropout
from tensorflow.keras.models import load_model
from sklearn.preprocessing import MinMaxScaler
import matplotlib.pyplot as plt
from eodhd import APIClient

TensorFlow produces a lot of log output by default. We prefer cleaner output, so we reduce it by setting the “TF_CPP_MIN_LOG_LEVEL” environment variable through os.environ, right after importing the “os” module and before importing TensorFlow. A value of "1" filters out informational messages, and "2" also filters out warnings.

Training machine learning and AI models involves considerable fine-tuning, mostly through settings known as hyperparameters. The topic is intricate, and mastering it is something of an art. The best hyperparameters depend on many factors. Based on the daily S&P 500 data we retrieve through the EODHD API, we started with some commonly used settings. You’re encouraged to adjust them to improve the results. For now, we recommend keeping the sequence length at 20.

# Configurable hyperparameters
seq_length = 20
batch_size = 64
lstm_units = 50
epochs = 100

The next step is to retrieve your EODHD API_TOKEN from the “.env” file.

# Load environment variables from the .env file
load_dotenv()

# Retrieve the API key
API_TOKEN = os.getenv("API_TOKEN")

if API_TOKEN is not None:
    print(f"API key loaded: {API_TOKEN[:4]}********")
else:
    raise LookupError("Failed to load API key.")

Make sure you have a valid EODHD API_TOKEN so that the data can be retrieved.

We’ve defined several reusable functions and will explain each one as we use it below. Comments inside the functions clarify what they do.

def get_ohlc_data(use_cache: bool = False) -> pd.DataFrame:
    ohlcv_file = "data/ohlcv.csv"

    # Reuse the cached CSV when caching is enabled and the file exists
    if use_cache and os.path.exists(ohlcv_file):
        return pd.read_csv(ohlcv_file, index_col=None)

    # Otherwise download the daily candles from the EODHD API
    api = APIClient(API_TOKEN)
    df = api.get_historical_data(
        symbol="HSPX.LSE",
        interval="d",
        iso8601_start="2010-05-17",
        iso8601_end="2023-10-04",
    )

    # Save the download so that later runs can skip the API call
    if use_cache:
        df.to_csv(ohlcv_file, index=False)
    return df

def create_sequences(data, seq_length):
    x, y = [], []
    for i in range(len(data) - seq_length):
        x.append(data[i : i + seq_length])
        y.append(data[i + seq_length, 3])  # The prediction target "close" is the 4th column (index 3)
    return np.array(x), np.array(y)

def get_features(df: pd.DataFrame, feature_columns: tuple = ("open", "high", "low", "close", "volume")) -> np.ndarray:
    # Return the selected columns as a 2D NumPy array
    return df[list(feature_columns)].values

def get_target(df: pd.DataFrame, target_column: str = "close") -> np.ndarray:
    # Return the target column as a 1D NumPy array
    return df[target_column].values

def get_scaler(use_cache: bool = True) -> MinMaxScaler:
    scaler_file = "data/scaler.pkl"

    # Load the pickled scaler when caching is enabled and the file exists
    if use_cache and os.path.exists(scaler_file):
        with open(scaler_file, "rb") as f:
            return pickle.load(f)

    scaler = MinMaxScaler(feature_range=(0, 1))
    if use_cache:
        # Note: the scaler is pickled before it is fitted; scale_features() fits it on every run
        with open(scaler_file, "wb") as f:
            pickle.dump(scaler, f)
    return scaler

def scale_features(scaler: MinMaxScaler, features: np.ndarray) -> np.ndarray:
    # Fit the scaler to the features and scale each column to the 0-1 range
    return scaler.fit_transform(features)

def get_lstm_model(use_cache: bool = False) -> Sequential:
    model_file = "data/lstm_model.h5"

    # Load a previously trained model when caching is enabled and the file exists
    if use_cache and os.path.exists(model_file):
        return load_model(model_file)

    # Build and train the LSTM model.
    # Note: x_train, y_train, x_test and y_test are read from module scope,
    # so they must be defined before this function is called.
    model = Sequential()
    model.add(LSTM(units=lstm_units, activation="tanh", input_shape=(seq_length, 5)))
    model.add(Dropout(0.2))
    model.add(Dense(units=1))
    model.compile(optimizer="adam", loss="mean_squared_error")
    model.fit(x_train, y_train, epochs=epochs, batch_size=batch_size, validation_data=(x_test, y_test))

    # Save the entire model to an HDF5 file so that later runs can skip training
    if use_cache:
        model.save(model_file)
    return model

def get_predicted_x_test_prices(x_test: np.ndarray) -> np.ndarray:
    # Uses the module-level "model" and "scaler"
    predicted = model.predict(x_test)

    # Create a zero-filled matrix to aid in inverse transformation
    zero_filled_matrix = np.zeros((predicted.shape[0], 5))

    # Replace the 'close' column of zero_filled_matrix with the predicted values
    zero_filled_matrix[:, 3] = np.squeeze(predicted)

    # Perform inverse transformation
    return scaler.inverse_transform(zero_filled_matrix)[:, 3]

def plot_x_test_actual_vs_predicted(actual_close_prices, predicted_x_test_close_prices) -> None:
    # Plotting the actual and predicted close prices
    plt.figure(figsize=(14, 7))
    plt.plot(actual_close_prices, label="Actual Close Prices", color="blue")
    plt.plot(predicted_x_test_close_prices, label="Predicted Close Prices", color="red")
    plt.title("Actual vs Predicted Close Prices")
    plt.xlabel("Time")
    plt.ylabel("Price")
    plt.legend()
    plt.show()

def predict_next_close(df: pd.DataFrame, scaler: MinMaxScaler) -> float:
    # Take the last X days of data and scale it
    last_x_days = df.iloc[-seq_length:][["open", "high", "low", "close", "volume"]].values
    last_x_days_scaled = scaler.transform(last_x_days)

    # Reshape this data to be a single sequence and make the prediction
    last_x_days_scaled = np.reshape(last_x_days_scaled, (1, seq_length, 5))

    # Predict the future close price (uses the module-level "model")
    future_close_price = model.predict(last_x_days_scaled)

    # Create a zero-filled matrix for the inverse transformation
    zero_filled_matrix = np.zeros((1, 5))

    # Put the predicted value in the 'close' column (index 3)
    zero_filled_matrix[0, 3] = np.squeeze(future_close_price)

    # Perform the inverse transformation to get the future price on the original scale
    return scaler.inverse_transform(zero_filled_matrix)[0, 3]

def evaluate_model(x_test: np.ndarray) -> None:
    # Evaluate the model against the module-level "y_test" (both are in scaled units)
    y_pred = model.predict(x_test)
    mse = mean_squared_error(y_test, y_pred)
    mae = mean_absolute_error(y_test, y_pred)
    rmse = np.sqrt(mse)

    print(f"Mean Squared Error: {mse}")
    print(f"Mean Absolute Error: {mae}")
    print(f"Root Mean Squared Error: {rmse}")

One aspect we’d like to highlight is the “use_cache” parameter in several of the functions. It reduces unnecessary calls to the EODHD API and avoids retraining the model on identical daily data. When “use_cache” is enabled, the data is saved to files in the “data/” directory: if a file does not exist it is created, and if it already exists it is loaded. This makes repeated runs of the script much faster. To fetch fresh data on each run, disable “use_cache” when calling the function, or delete the files in the “data/” directory.
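The load-if-present, otherwise-create-and-save behaviour described here can be captured in one small helper. The sketch below is only an illustration of the pattern, not the article's code: `load_or_create` and `expensive_download` are hypothetical names, and the cache is written to a temporary directory so the example leaves no files behind.

```python
import os
import pickle
import tempfile


def load_or_create(path, create, use_cache=True):
    """Return the object cached at `path`, or build it with `create()`."""
    if use_cache and os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)

    result = create()
    if use_cache:
        # Make sure the cache directory exists before writing
        os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
        with open(path, "wb") as f:
            pickle.dump(result, f)
    return result


calls = []


def expensive_download():
    # Stand-in for an API call or a training run
    calls.append(1)
    return {"rows": 3369}


with tempfile.TemporaryDirectory() as tmp:
    cache_file = os.path.join(tmp, "data", "ohlcv.pkl")
    first = load_or_create(cache_file, expensive_download)   # cache miss: runs and saves
    second = load_or_create(cache_file, expensive_download)  # cache hit: loads from disk
    fresh = load_or_create(cache_file, expensive_download, use_cache=False)  # always runs

print(len(calls))  # 2
```

The expensive function runs only twice across three calls: once to fill the cache and once when caching is switched off.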

We now proceed to the core of the code…

if __name__ == "__main__":
    # Retrieve 3369 days of S&P 500 data
    df = get_ohlc_data(use_cache=True)
    print(df)

First, we retrieve our OHLCV data from the EODHD API and load it into a Pandas DataFrame named “df”. OHLCV stands for Open, High, Low, Close, and Volume, which are the standard attributes of trading candle data. As mentioned earlier, caching is enabled to speed up repeated runs. We also print the data to the screen, which is optional.

We’ll cover the following code block in one go…

features = get_features(df)
target = get_target(df)

scaler = get_scaler(use_cache=True)
scaled_features = scale_features(scaler, features)

x, y = create_sequences(scaled_features, seq_length)

train_size = int(0.8 * len(x))  # Create a train/test split of 80/20%
x_train, x_test = x[:train_size], x[train_size:]
y_train, y_test = y[:train_size], y[train_size:]

# Re-shape input to fit lstm layer
x_train = np.reshape(x_train, (x_train.shape[0], seq_length, 5))  # 5 features
x_test = np.reshape(x_test, (x_test.shape[0], seq_length, 5))  # 5 features

- “features” is the array of inputs we use to forecast our target, namely “close”.

- “target” encompasses a list of the target values, such as “close”.

- “scaler” represents a method utilized to normalize figures, rendering them comparable. For instance, our dataset might commence with a close value of 784 and conclude with 3538. The higher figure in the last row doesn’t inherently signify greater significance for prediction purposes. Normalization ensures comparability.

- “scaled_features” are the outcomes of this scaling process, which we will use to train our AI model.

- “x_train” and “x_test” denote the datasets we will use for training and testing our AI model, respectively, with an 80/20 split being a common practice. This means 80% of our trading data is allocated for training, and 20% is reserved for testing the model. The “x” indicates these are the features or inputs.

- “y_train” and “y_test” function similarly but contain only the target values, such as “close”.

- Lastly, the data must be reshaped to suit an LSTM layer’s requirements.
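The steps in the list above can be sketched end to end without any libraries. This is a simplified illustration in plain Python, not the article's NumPy and scikit-learn code: the price rows are synthetic, `min_max_scale` is a hypothetical stand-in for the default behaviour of `MinMaxScaler`, and it assumes every column has at least two distinct values.

```python
def min_max_scale(rows):
    """Scale each column of `rows` to the range 0-1."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(value - lo) / (hi - lo) for value, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def create_sequences(rows, seq_length, target_index):
    """Pair each window of `seq_length` rows with the target of the row that follows it."""
    x, y = [], []
    for i in range(len(rows) - seq_length):
        x.append(rows[i : i + seq_length])
        y.append(rows[i + seq_length][target_index])
    return x, y


# Synthetic stand-in for the OHLCV table: 3369 rows of open, high, low, close, volume
n_rows, seq_length = 3369, 20
rows = [[100.0 + d, 101.0 + d, 99.0 + d, 100.5 + d, 1000.0 + 3 * d] for d in range(n_rows)]

scaled = min_max_scale(rows)
x, y = create_sequences(scaled, seq_length, target_index=3)  # "close" is column 3

# Chronological 80/20 split: the most recent 20% is held back for testing
train_size = int(0.8 * len(x))
x_train, x_test = x[:train_size], x[train_size:]
y_train, y_test = y[:train_size], y[train_size:]

print(len(x), len(x_train), len(x_test))     # 3349 2679 670
print(len(x_train[0]), len(x_train[0][0]))   # 20 5
```

Each training sample is a window of 20 rows with 5 features, which is the (samples, seq_length, 5) shape the LSTM layer expects. With 3369 rows and a batch size of 64, the 2679 training sequences work out to 42 batches per epoch.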

We have developed a function to either train the model anew or load a previously trained model.

model = get_lstm_model(use_cache=True)

The image displayed offers a glimpse into the training sequence. You’ll observe that initially, the “loss” and “val_loss” metrics may not align closely. However, as training proceeds, these figures are expected to converge, indicating progress.

- Loss: This is the mean squared error (MSE) computed on the training dataset. It reflects the “cost” or “error” between predicted and true labels for each training epoch. The goal is to reduce this figure through successive epochs.

- Val_loss: This mean squared error, determined on the validation dataset, measures the model’s performance on data it hasn’t encountered during training. It serves as an indicator of the model’s capability to generalize to new, unseen data.
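One common way to act on these two numbers is to stop training once “val_loss” stops improving. The sketch below shows that rule in plain Python. The per-epoch values are invented for illustration and are not the article's results, and `best_epoch` is a hypothetical helper rather than part of the project code.

```python
def best_epoch(val_losses, patience=5):
    """Return (best_epoch_index, stopped_at_index) for a simple early-stopping rule.

    Training is treated as finished once `val_loss` has failed to improve
    for `patience` consecutive epochs.
    """
    best_index, best_value = 0, val_losses[0]
    for epoch, value in enumerate(val_losses):
        if value < best_value:
            best_index, best_value = epoch, value
        elif epoch - best_index >= patience:
            return best_index, epoch
    return best_index, len(val_losses) - 1


# Hypothetical per-epoch values, invented for illustration only
loss = [0.050, 0.020, 0.010, 0.006, 0.004, 0.003, 0.0025, 0.002, 0.0018, 0.0015]
val_loss = [0.060, 0.030, 0.015, 0.009, 0.008, 0.009, 0.010, 0.011, 0.012, 0.013]

best, stopped = best_epoch(val_loss, patience=3)
gap_at_best = val_loss[best] - loss[best]
gap_at_end = val_loss[-1] - loss[-1]

print(best, stopped)  # 4 7
print(gap_at_end > gap_at_best)  # True
```

In this made-up run the training loss keeps falling while the validation loss turns upward after epoch 4, which is the usual sign of overfitting. Keras provides an `EarlyStopping` callback that applies the same idea during `model.fit`.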

If you want to see a list of the predicted close prices on the test set, you can use this code.

predicted_x_test_close_prices = get_predicted_x_test_prices(x_test)

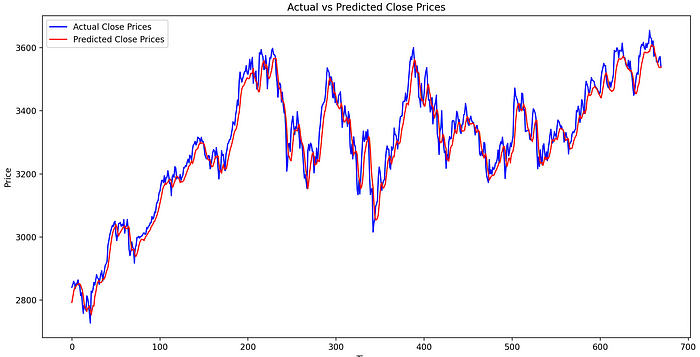

print("Predicted close prices:", predicted_x_test_close_prices)

On its own, the data may not be particularly illuminating or straightforward to visualize. However, by plotting the actual closing prices against the predicted closing prices (keeping in mind this represents 20% of the entire dataset), we get a clearer picture as shown below.

# Plot the actual and predicted close prices for the test data

plot_x_test_actual_vs_predicted(df["close"].tail(len(predicted_x_test_close_prices)).values, predicted_x_test_close_prices)

The results illustrate that the model has performed commendably in predicting the closing price during the testing phase.
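The predicted prices had to be converted back from the scaler's 0 to 1 range before plotting. The helper functions do this by placing the predictions into a five-column zero matrix, because the fitted scaler expects all five feature columns. Since min-max scaling treats each column independently, the same result follows from the minimum and maximum of the close column alone. A minimal plain-Python sketch of that arithmetic, with illustrative values (784 and 3538 echo the example figures mentioned earlier, the others are invented):

```python
def scale(value, lo, hi):
    """Map a price to the 0-1 range."""
    return (value - lo) / (hi - lo)


def unscale(scaled_value, lo, hi):
    """Map a 0-1 value back to a price."""
    return scaled_value * (hi - lo) + lo


# Illustrative close prices; only the smallest and largest values matter here
closes = [784.0, 1200.0, 2500.0, 3538.0]
lo, hi = min(closes), max(closes)

scaled = [scale(c, lo, hi) for c in closes]
restored = [unscale(s, lo, hi) for s in scaled]

# A hypothetical model output of 0.5 corresponds to the midpoint of the price range
predicted_price = unscale(0.5, lo, hi)
print(predicted_price)  # 2161.0
```

The zero-filled matrix in the article's code performs this same calculation for the close column. The zeros in the other columns are transformed too, but those results are discarded.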

Now, moving to the aspect likely most anticipated: Can we determine the predicted closing price for tomorrow?

# Predict the next close price

predicted_next_close = predict_next_close(df, scaler)

print("Predicted next close price:", predicted_next_close)

Predicted next close price: 3536.906685638428

This serves as a basic example for educational purposes and marks just the beginning. Proceeding from here, you might consider incorporating additional training data, tweaking the hyperparameters, or applying the models to various markets and time intervals.
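When experimenting further, it may help to compare any model against a naive reference. A simple one is the persistence baseline, which assumes tomorrow's close equals today's close. The sketch below is an optional addition rather than part of the article's project, and all prices and model outputs in it are invented for illustration.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equally long sequences."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


# Invented closing prices for six consecutive days
closes = [100.0, 102.0, 101.0, 104.0, 103.0, 106.0]

# Hypothetical model predictions for days 1 to 5
model_predictions = [100.5, 101.5, 101.5, 103.5, 103.5]

actual = closes[1:]         # what really happened on days 1 to 5
persistence = closes[:-1]   # baseline: repeat the previous day's close

baseline_rmse = rmse(actual, persistence)
model_rmse = rmse(actual, model_predictions)

print(round(baseline_rmse, 4), round(model_rmse, 4))
print("model beats baseline:", model_rmse < baseline_rmse)
```

If a model cannot beat this baseline on the test set, a close visual match between the predicted and actual curves may mean only that it has learned to follow the previous day's price.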

If you want to evaluate the model you can include this.

# Evaluate the model

evaluate_model(x_test)

Which in our case gives…

Mean Squared Error: 0.00021641664334765608
Mean Absolute Error: 0.01157513692221611
Root Mean Squared Error: 0.014711106122506767

The `mean_squared_error` and `mean_absolute_error` functions from scikit-learn’s metrics module calculate the Mean Squared Error (MSE) and Mean Absolute Error (MAE), respectively. The Root Mean Squared Error (RMSE) is the square root of the MSE. Because “y_test” and the model’s predictions are both in scaled form, these errors are expressed in the scaler’s 0 to 1 units rather than in price units.

These metrics offer a quantitative evaluation of the model’s performance, while the plot helps in visually comparing the predicted values against the actual figures.
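To show what the three metrics compute, here is a small worked example in plain Python. The values are invented and unrelated to the results above. The final lines convert an RMSE measured on scaled data into price units, assuming min-max scaling and an illustrative close range of 784 to 3538.

```python
import math


def mse(actual, predicted):
    """Mean of the squared errors."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mae(actual, predicted):
    """Mean of the absolute errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Invented scaled values (0-1 range), for illustration only
y_true = [0.50, 0.60, 0.70, 0.80]
y_hat = [0.52, 0.58, 0.73, 0.80]

mse_value = mse(y_true, y_hat)
mae_value = mae(y_true, y_hat)
rmse_value = math.sqrt(mse_value)

# Min-max scaling divides prices by (max - min), so multiplying a scaled
# RMSE or MAE by that range expresses it in price units again.
close_min, close_max = 784.0, 3538.0  # illustrative range, not measured
rmse_in_price_units = rmse_value * (close_max - close_min)

print(round(mse_value, 6), round(mae_value, 4), round(rmse_value, 4))
print(round(rmse_in_price_units, 2))
```

The same conversion applies to MAE. MSE would need to be multiplied by the square of the range instead.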

Please note that this article is for informational purposes only and should not be taken as financial advice. We do not bear responsibility for any trading decisions made based on the content of this article. Readers are advised to conduct their own research or consult with a qualified financial professional before making any investment decisions.
